In my last post about OpenAI and ChatGPT, I showed how we can obtain embedding vectors for individual words from OpenAI’s embeddings endpoint and used these to categorize words. In this post, I will show how we can combine the embedding vectors for individual words in a sentence. This will allow us to cluster or group sentences based on different parameters.

As explained in my last post, embedding vectors are simply vectors – or coordinates – in an embedding space, which is 1536-dimensional for the text-embedding-ada-002 model I will be using in this post. Similar words tend to be placed close together in this 1536-dimensional space.

The notion of ‘close together’ has a specific mathematical meaning. Being three-dimensional creatures, we are not able to visualize a 1536-dimensional space or distances between positions within such a space. However, we can still use vector arithmetic to determine the distance between vectors, just as we would in a two- or three-dimensional space. Using Pythagoras’ theorem, we simply add the squared differences along all 1536 axes and then take the square root of the result.

Hence if we have two 4-dimensional vectors v1 = (1, 2, 3, 1) and v2 = (2, -1, 3, 0), we find the distance:

distance(v1, v2) = √((2-1)² + (-1-2)² + (3-3)² + (0-1)²) = √11 ≈ 3.32

We can also add the two vectors:

v1 + v2 = (1 + 2, 2 + (-1), 3 + 3, 1 + 0) = (3, 1, 6, 1)

Or multiply a vector by a number (a scalar):

2*v1 = (2*1, 2*2, 2*3, 2*1) = (2, 4, 6, 2)

When we move into a 1536-dimensional space, we simply extend these operations to cover all 1536 coordinates.
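The three operations above can be reproduced in a few lines of plain Python. This is only a minimal sketch; the function names `distance`, `add` and `scale` are my own choices for illustration, and the same functions work unchanged for vectors of any length:

```python
import math

def distance(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def add(a, b):
    """Coordinate-wise sum of two vectors."""
    return [x + y for x, y in zip(a, b)]

def scale(k, a):
    """Multiply every coordinate of a vector by the scalar k."""
    return [k * x for x in a]

v1 = [1, 2, 3, 1]
v2 = [2, -1, 3, 0]

print(round(distance(v1, v2), 2))  # 3.32
print(add(v1, v2))                 # [3, 1, 6, 1]
print(scale(2, v1))                # [2, 4, 6, 2]
```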

Clustering

We can also group a list of embedding vectors into a fixed number of clusters through a process called clustering. In this post, I use k-means clustering, an algorithm that groups vectors into a predefined number of clusters based on the squared distance. Using the squared distance simplifies the calculation and has no impact on the cluster assignment, because the closest centroid is the same whether or not we take the square root.

For example, if I have 5 embedding vectors and I want to group them into two clusters, I can run the vectors through the algorithm. Mathematically, two cluster centers (called cluster centroids) are calculated, and the embeddings are assigned to the clusters based on which cluster centroid is closest.

The process begins by randomly selecting 2 embedding vectors as the initial cluster centroids. Then, the other 3 embeddings are assigned to the closest cluster, and the centroid is recalculated to be placed in the middle of the resulting cluster. Subsequently, a new assignment occurs, potentially moving embeddings from one cluster to the other, and the centroids are recalculated. This iterative process continues until no changes occur.

The algorithm tries to choose the centroids so that the combined squared distance from the embeddings to their respective cluster centroids is as small as possible, although, depending on the random starting points, it may settle on a grouping that is good but not the very best. It’s important to note that the position of the centroid is not necessarily a specific embedding. For instance, if two embedding vectors are placed in a cluster, the cluster centroid will be the point exactly halfway between the two embedding vectors.
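The iteration described above can be sketched in plain Python. This is a simplified illustration under stated assumptions (made-up two-dimensional points instead of embeddings, a fixed random seed, and hypothetical function names), not the implementation used to produce the results in this post:

```python
import random

def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def mean(vectors):
    """Coordinate-wise average, i.e. the centroid of the vectors."""
    n = len(vectors)
    return [sum(coords) / n for coords in zip(*vectors)]

def k_means(vectors, k, seed=0, max_iterations=100):
    """Return (cluster index per vector, list of centroids)."""
    rng = random.Random(seed)
    # Start with k randomly chosen vectors as the initial centroids.
    centroids = rng.sample(vectors, k)
    assignment = None
    for _ in range(max_iterations):
        # Assign every vector to its closest centroid.
        new_assignment = [
            min(range(k), key=lambda c: squared_distance(v, centroids[c]))
            for v in vectors
        ]
        if new_assignment == assignment:
            break  # nothing moved, so we are done
        assignment = new_assignment
        # Move each centroid to the middle of its cluster.
        for c in range(k):
            members = [v for v, a in zip(vectors, assignment) if a == c]
            if members:
                centroids[c] = mean(members)
    return assignment, centroids

# Five made-up points: three close together and two far away from them.
points = [[1, 1], [1, 2], [2, 1], [8, 8], [9, 8]]
labels, centroids = k_means(points, k=2)
print(labels)
print(centroids)
```

Which cluster ends up with index 0 depends on the random starting points, but the first three points share one cluster and the last two share the other. The centroid of the two-point cluster lands exactly halfway between them, at (8.5, 8.0).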

A practical example

We will now turn to a practical example. Let’s say we have a list of reviews of Italian dishes and pets. The reviews can be either positive or negative.

Logically, we can imagine two ways to group them: based on the noun (whether the review is of an Italian dish or a pet), and on the adjective (whether the review is good or bad).

To keep things simple, we will use a list of reviews like ‘Pizza is good’ and ‘Dog is awful.’ This means that all the reviews will be in the form ‘[NOUN] [VERB] [ADJECTIVE]’. We will use the following nouns: Cat, Dog, Hamster, Spaghetti, Lasagna, and Pizza, and the following adjectives: good, great, horrible, and awful. For the verb, we will always use ‘is.’ These words allow us to generate 24 very simple reviews.

If we obtain the embeddings for the 6 nouns, we can examine the distances between the individual word embeddings using the distance formula described above:

| | Cat | Dog | Hamster | Spaghetti | Lasagna | Pizza |
| --- | --- | --- | --- | --- | --- | --- |
| Cat | 0 | 0.4932 | 0.5858 | 0.6587 | 0.6653 | 0.5959 |
| Dog | 0.4932 | 0 | 0.6322 | 0.6817 | 0.6872 | 0.5818 |
| Hamster | 0.5858 | 0.6322 | 0 | 0.6299 | 0.6560 | 0.6339 |
| Spaghetti | 0.6587 | 0.6817 | 0.6299 | 0 | 0.4281 | 0.5465 |
| Lasagna | 0.6653 | 0.6872 | 0.6560 | 0.4281 | 0 | 0.5137 |
| Pizza | 0.5959 | 0.5818 | 0.6339 | 0.5465 | 0.5137 | 0 |
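A distance table like this one can be computed pairwise with the Euclidean formula from earlier. The following is a minimal sketch, assuming the embeddings have already been fetched into a dictionary; the three-dimensional vectors are made-up stand-ins, not real embeddings, and `distance_table` is a hypothetical helper name:

```python
import math

def distance(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def distance_table(embeddings):
    """Return {word: {other_word: distance}} for every pair of words."""
    return {
        w1: {w2: distance(v1, v2) for w2, v2 in embeddings.items()}
        for w1, v1 in embeddings.items()
    }

# Made-up stand-ins; the real vectors come from the embeddings endpoint.
toy_embeddings = {
    "Cat": [0.9, 0.1, 0.0],
    "Dog": [0.8, 0.2, 0.1],
    "Pizza": [0.1, 0.9, 0.2],
}

table = distance_table(toy_embeddings)
for word, row in table.items():
    print(word, [round(d, 4) for d in row.values()])
```

As in the table above, the diagonal is 0 and the table is symmetric, because the distance from A to B equals the distance from B to A.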

Using k-means clustering, we can group the nouns into two clusters. Not surprisingly, the embedding vectors fall into two well-defined groups:

| Cluster 1 | Cluster 2 |
| --- | --- |
| Spaghetti 0.279 | Cat 0.293 |
| Lasagna 0.257 | Dog 0.324 |
| Pizza 0.323 | Hamster 0.372 |

The number next to each word represents its distance to the cluster’s centroid, which, remember, is the point in the middle of the group.

If we do the same for the adjectives we get these distances:

| | good | great | horrible | awful |
| --- | --- | --- | --- | --- |
| good | 0 | 0.4590 | 0.5470 | 0.5676 |
| great | 0.4590 | 0 | 0.5764 | 0.5898 |
| horrible | 0.5470 | 0.5764 | 0 | 0.3338 |
| awful | 0.5676 | 0.5898 | 0.3338 | 0 |

And these two clusters:

| Cluster 1 | Cluster 2 |
| --- | --- |
| good 0.230 | horrible 0.167 |
| great 0.230 | awful 0.167 |

Clustering reviews

To cluster the reviews, however, we need to combine the embedding vectors for all the words that make up a review. The OpenAI embedding endpoint already supports generating embedding vectors for complete sentences, but for the sake of understanding, let’s say it did not, and we had to figure out a way ourselves.

So, faced with the review ‘Spaghetti is good,’ we need to combine three vectors: v_Spaghetti, v_is and v_good.

The simplest way is to add the three embedding vectors together using plain vector addition:

v_review = v_noun + v_verb + v_adjective
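As a minimal sketch of this addition, assuming the word embeddings are available in a dictionary (the three-dimensional vectors below are made-up stand-ins, and `sentence_vector` is a hypothetical helper name):

```python
import math

def distance(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def sentence_vector(sentence, embeddings):
    """Add up the embedding vectors of all words in the sentence."""
    vectors = [embeddings[word] for word in sentence.split()]
    return [sum(coords) for coords in zip(*vectors)]

# Made-up stand-ins for the real word embeddings.
toy_embeddings = {
    "Cat": [0.9, 0.1, 0.0],
    "is": [0.3, 0.3, 0.3],
    "good": [0.1, 0.2, 0.9],
    "horrible": [0.2, 0.1, -0.8],
}

cat_good = sentence_vector("Cat is good", toy_embeddings)
cat_horrible = sentence_vector("Cat is horrible", toy_embeddings)

# The two sentences differ only in the adjective, so these two distances match.
print(round(distance(cat_good, cat_horrible), 4))
print(round(distance(toy_embeddings["good"], toy_embeddings["horrible"]), 4))
```

One consequence of plain addition is worth noting: when two sentences differ in a single word, the shared words cancel out in the subtraction. This is why a value such as 0.5470 shows up both as the distance between ‘good’ and ‘horrible’ and as the distance between ‘Cat is good’ and ‘Cat is horrible’.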

If we try this we get distances like these:

| | Cat is good | Cat is horrible | Spaghetti is good | Spaghetti is horrible |
| --- | --- | --- | --- | --- |
| Cat is good | 0 | 0.5470 | 0.6587 | 0.8659 |
| Cat is horrible | 0.5470 | 0 | 0.8464 | 0.6587 |
| Spaghetti is good | 0.6587 | 0.8464 | 0 | 0.5470 |
| Spaghetti is horrible | 0.8659 | 0.6587 | 0.5470 | 0 |

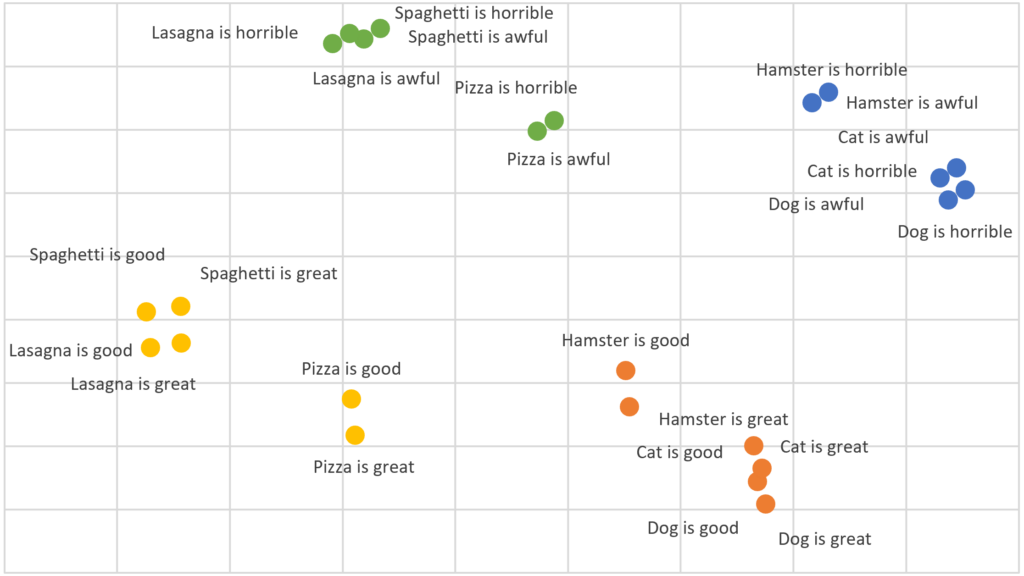

If we group them into 4 clusters we find these groups:

| Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 |
| --- | --- | --- | --- |
| Cat is good 0.370 | Cat is horrible 0.331 | Spaghetti is good 0.365 | Spaghetti is horrible 0.330 |
| Cat is great 0.374 | Cat is awful 0.343 | Spaghetti is great 0.357 | Spaghetti is awful 0.320 |
| Dog is good 0.396 | Dog is horrible 0.357 | Lasagna is good 0.351 | Lasagna is horrible 0.305 |
| Dog is great 0.397 | Dog is awful 0.372 | Lasagna is great 0.339 | Lasagna is awful 0.308 |
| Hamster is good 0.439 | Hamster is horrible 0.419 | Pizza is good 0.388 | Pizza is horrible 0.361 |
| Hamster is great 0.435 | Hamster is awful 0.395 | Pizza is great 0.405 | Pizza is awful 0.367 |

As we can see, by simply adding the embedding vectors, we are able to correctly group the reviews based on both the noun and the adjective:

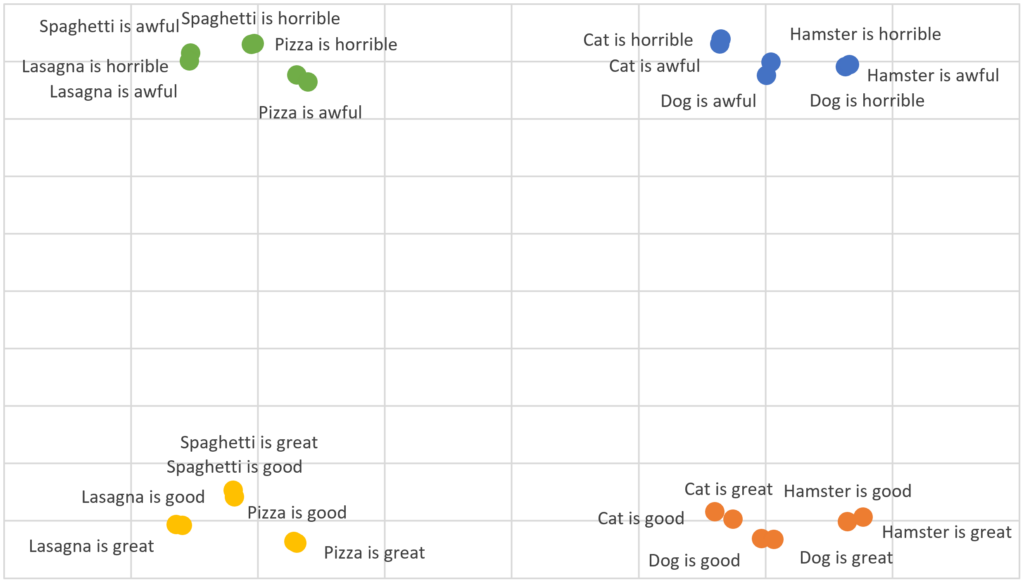

Comparing with the OpenAI model

As mentioned, we can also ask the OpenAI model to generate the embeddings for the complete sentences, and the methods used by the OpenAI model are of course far more advanced than our simple vector addition.

However, the results are actually somewhat similar:

| | Cat is good | Cat is horrible | Spaghetti is good | Spaghetti is horrible |
| --- | --- | --- | --- | --- |
| Cat is good | 0 | 0.4555 | 0.5614 | 0.6806 |
| Cat is horrible | 0.4555 | 0 | 0.6842 | 0.5316 |
| Spaghetti is good | 0.5614 | 0.6842 | 0 | 0.4328 |
| Spaghetti is horrible | 0.6806 | 0.5316 | 0.4328 | 0 |

The resulting clusters are also the same, albeit tighter, with smaller distances to the centroids:

| Cluster 1 | Cluster 2 | Cluster 3 | Cluster 4 |
| --- | --- | --- | --- |
| Cat is good 0.292 | Cat is horrible 0.272 | Spaghetti is good 0.273 | Spaghetti is horrible 0.259 |
| Cat is great 0.289 | Cat is awful 0.275 | Spaghetti is great 0.271 | Spaghetti is awful 0.249 |
| Dog is good 0.306 | Dog is horrible 0.283 | Lasagna is good 0.253 | Lasagna is horrible 0.242 |
| Dog is great 0.311 | Dog is awful 0.296 | Lasagna is great 0.253 | Lasagna is awful 0.239 |
| Hamster is good 0.318 | Hamster is horrible 0.294 | Pizza is good 0.293 | Pizza is horrible 0.277 |
| Hamster is great 0.321 | Hamster is awful 0.292 | Pizza is great 0.294 | Pizza is awful 0.291 |

Weighting embedding vectors

While we have seen that adding word embeddings works in simple cases, the observant reader will notice a significant problem with this approach: word order. When simply adding embedding vectors together, the two sentences ‘Man bites dog’ and ‘Dog bites man’ will produce the same embedding vector, even though the meanings of the two sentences are quite different.
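The word-order problem is easy to demonstrate. A minimal sketch, using made-up two-dimensional vectors in place of real embeddings (`sentence_vector` is a hypothetical helper name):

```python
def sentence_vector(sentence, embeddings):
    """Add up the embedding vectors of all words in the sentence."""
    vectors = [embeddings[word.lower()] for word in sentence.split()]
    return [sum(coords) for coords in zip(*vectors)]

# Made-up stand-ins for the real word embeddings.
toy_embeddings = {
    "man": [1.0, 0.0],
    "bites": [0.5, 0.5],
    "dog": [0.0, 1.0],
}

man_bites_dog = sentence_vector("Man bites dog", toy_embeddings)
dog_bites_man = sentence_vector("Dog bites man", toy_embeddings)

print(man_bites_dog)  # [1.5, 1.5]
print(dog_bites_man)  # [1.5, 1.5]
```

Since addition does not care about the order of its terms, any reordering of the same words gives the same sentence vector (with real embeddings, possibly up to tiny floating-point rounding differences).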

In addition, all the embedding vectors are weighted equally. For example, the embedding vector for the verb ‘is’ contributes as much to the combined embedding vector as the embedding vector for the word ‘Spaghetti’.

So there is a lot of room for improvement, and in complex language models like those developed by OpenAI, this process is highly sophisticated, employing a combination of different techniques and layers of processing.

POS tagging

However, in this post, I will focus on one improvement that we will need if we want to scale our clustering mechanism to be able to handle more complex reviews: POS tagging.

Let’s say we are faced with a real-world review like this: ‘I thought that the spaghetti was really good’. The missing weights now become a problem, as the sentence contains a lot of words that are irrelevant for our clusters.

What we really want is to boost the noun ‘spaghetti’ and the adjective ‘good’, as they are the words that matter, and to downplay the other words.

We can do this by introducing weights, for example:

v_sentence = 0.5 * v_I + 0.5 * v_thought + 0.2 * v_that + 0.2 * v_the + 2 * v_spaghetti + 0.5 * v_was + 0.5 * v_really + 2 * v_good
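A weighted sum like this is a small extension of plain addition. The sketch below uses only three of the words and made-up two-dimensional vectors to keep the arithmetic easy to follow; `weighted_sum` is a hypothetical helper name:

```python
def weighted_sum(weighted_vectors):
    """Combine (weight, vector) pairs into a single vector."""
    dimensions = len(weighted_vectors[0][1])
    return [
        sum(weight * vector[i] for weight, vector in weighted_vectors)
        for i in range(dimensions)
    ]

# Made-up stand-ins for the real word embeddings.
v_was = [1.0, 1.0]
v_spaghetti = [1.0, 0.0]
v_good = [0.0, 1.0]

v_sentence = weighted_sum([(0.5, v_was), (2, v_spaghetti), (2, v_good)])
print(v_sentence)  # [2.5, 2.5]
```

With all weights set to 1, this reduces to the simple vector addition used earlier in the post.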

However, this approach of course requires a process that tags each word in a sentence with its grammatical role and correctly locates the noun and the adjective.

This process is called POS (Part-of-Speech) tagging, and fortunately, there are several frameworks available that can help us. Python libraries like Natural Language Toolkit (NLTK) and spaCy will tag words based on their grammatical function. These frameworks take a sentence and produce a list of grammatical tags. For example, using spaCy:

    import spacy

    # Load the small English language model
    nlp = spacy.load("en_core_web_sm")

    # Define the sentence
    text = "I thought that the spaghetti was really good"

    # Process the sentence with spaCy
    doc = nlp(text)

    # Print each token together with its coarse-grained POS tag
    for token in doc:
        print(token.text, token.pos_)

The result will be:

    I PRON
    thought VERB
    that SCONJ
    the DET
    spaghetti NOUN
    was AUX
    really ADV
    good ADJ

Using these POS tags, we are able to adjust the weights to highlight the noun (NOUN) and the adjective (ADJ), and to downplay the role of, for example, the pronoun (PRON). This will allow us to fine-tune the parameters when clustering reviews, for instance to focus on the subject or on the sentiment of the review.
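The step from POS tags to weights can be sketched as a simple lookup. The weights are the ones from the example formula earlier in this section; the fallback weight, the helper name and the two-dimensional vectors are my own assumptions for illustration. To keep the sketch runnable without spaCy, the tagged tokens are hard-coded from the output shown above:

```python
# Weights per POS tag, taken from the example formula earlier in this section.
POS_WEIGHTS = {
    "NOUN": 2.0, "ADJ": 2.0,
    "PRON": 0.5, "VERB": 0.5, "AUX": 0.5, "ADV": 0.5,
    "SCONJ": 0.2, "DET": 0.2,
}
DEFAULT_WEIGHT = 0.5  # assumption: fallback for tags not listed above

# (token, POS tag) pairs as produced by spaCy for the example sentence.
tagged_tokens = [
    ("I", "PRON"), ("thought", "VERB"), ("that", "SCONJ"), ("the", "DET"),
    ("spaghetti", "NOUN"), ("was", "AUX"), ("really", "ADV"), ("good", "ADJ"),
]

def weighted_sentence_vector(tagged_tokens, embeddings):
    """Sum the word vectors, each scaled by the weight of its POS tag."""
    dimensions = len(next(iter(embeddings.values())))
    result = [0.0] * dimensions
    for text, pos in tagged_tokens:
        weight = POS_WEIGHTS.get(pos, DEFAULT_WEIGHT)
        vector = embeddings[text.lower()]
        for i in range(dimensions):
            result[i] += weight * vector[i]
    return result

# Made-up stand-ins: filler words share one vector, the key words get their own.
toy_embeddings = {text.lower(): [0.1, 0.1] for text, _ in tagged_tokens}
toy_embeddings["spaghetti"] = [1.0, 0.0]
toy_embeddings["good"] = [0.0, 1.0]

v_sentence = weighted_sentence_vector(tagged_tokens, toy_embeddings)
print([round(x, 2) for x in v_sentence])
```

Changing the values in `POS_WEIGHTS` is then the knob for steering the clustering, for example raising NOUN to focus on the subject or raising ADJ to focus on the sentiment.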

Some thoughts

In this post, we have discussed how individual word embedding vectors can be combined to form embedding vectors for sentences. We saw that adding the individual embedding vectors for each word in a sentence can produce usable results in simple cases, and we also discussed some ways to refine the process, for example by using weights based on POS tags.

Not being a data scientist, my main goal is to understand and explain the broader concepts, and there are a lot of really complex topics that I have skipped. However, I hope that my post was interesting and that I got the big picture right ☺